These are the Tools Open Source Researchers Say They Need

What type of tools do you need?

That’s what we asked back in February, when we circulated a survey among open source researchers about the online tools they use in their research. A tool can be anything from a simple search engine accessed through a browser to an intricate, custom-built scraper that must be run from the command line.

More than 500 people took the time to answer our questions, telling us about their experiences using (or failing to use) various online tools.

We conducted our survey to help the wider open source developer community learn which tools, if built, would genuinely help researchers.

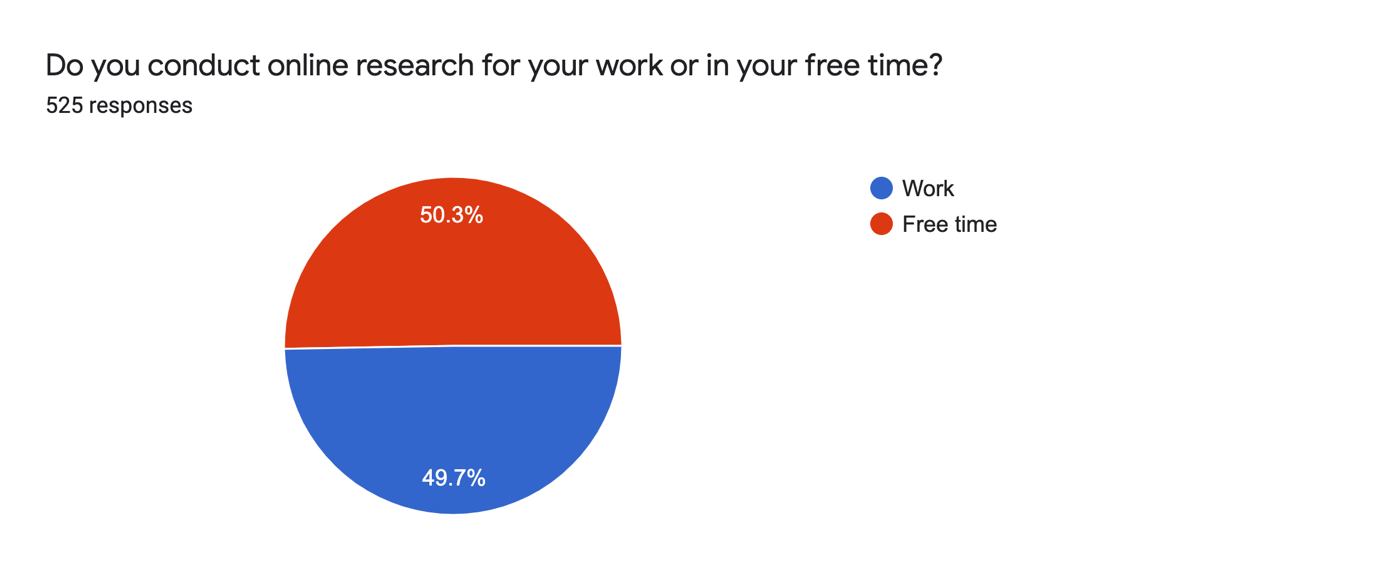

These survey results show that the online researcher community is highly diverse. Those who conduct open source investigations not only come from a wide range of professional backgrounds; about half of them also do this type of work in their spare time.

Researchers told us that the tools they are most likely to use need to be free and need to describe clearly what they can do and how to use them. Given that only a quarter of our respondents knew how to use the command line, tools that do not require advanced technical skills are particularly welcome.

Nearly 200 of our respondents provided concrete suggestions for tools that could help them in their work, which we have compiled in a publicly accessible spreadsheet.

Who Responded?

We received 525 responses to our survey. Those who took part came from a wide range of professional backgrounds, from cyber security analysts and journalists to corporate security specialists and academics. Importantly, not everybody conducts this type of research as their day job. Although we knew that volunteers play a significant role in the open source community, we were surprised to see that roughly half of our respondents did this research during work time and the other half in their free time.

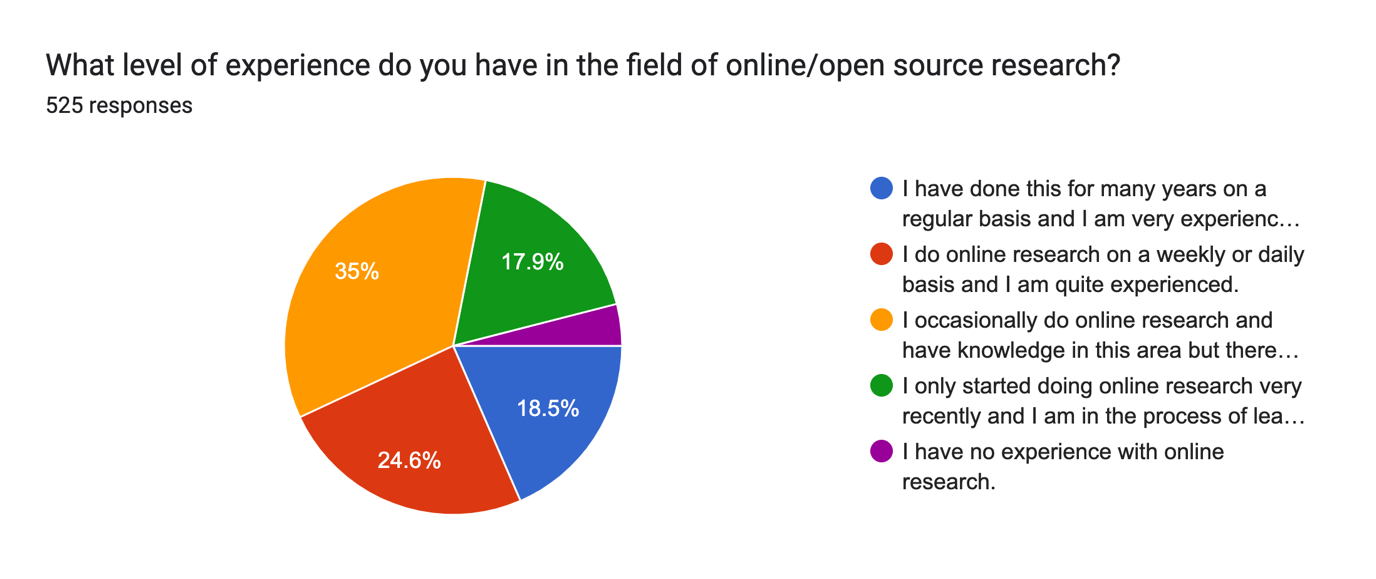

The respondents were also diverse in their level of online research experience. The biggest group (35%) indicated that they occasionally conduct online research but still see a need to improve their skills.

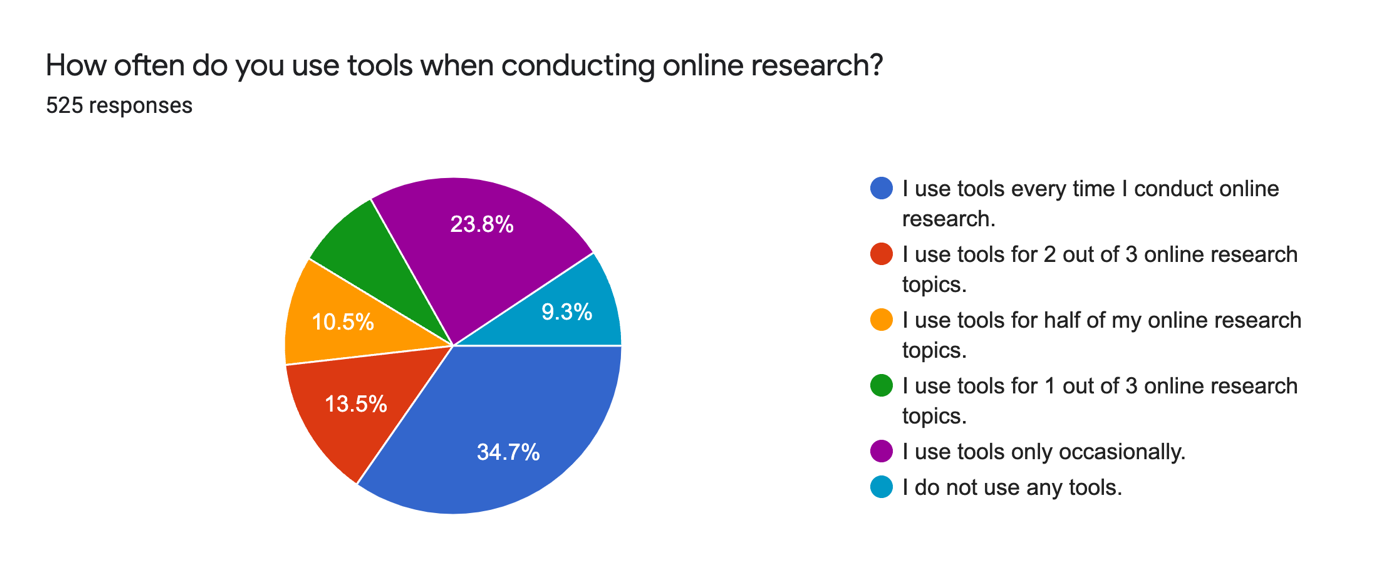

We also asked researchers how often they used tools in their work. Our definition of a tool included any digital means that helps perform specific tasks in online research, and to provide examples, we linked to Bellingcat’s Online Investigation Toolkit in the survey. Here, the biggest group (34.7%) reported that they use tools whenever they do this type of work, though respondents may have understood the term differently.

Researchers’ Favourite Tools Named

We wanted to know the survey participants’ favourite tools and whether they could describe a time when using a specific tool had been particularly helpful in one of their investigations.

The answers to both questions were sometimes very case-specific, including many tools that were named only once or twice, but there were also clear tendencies.

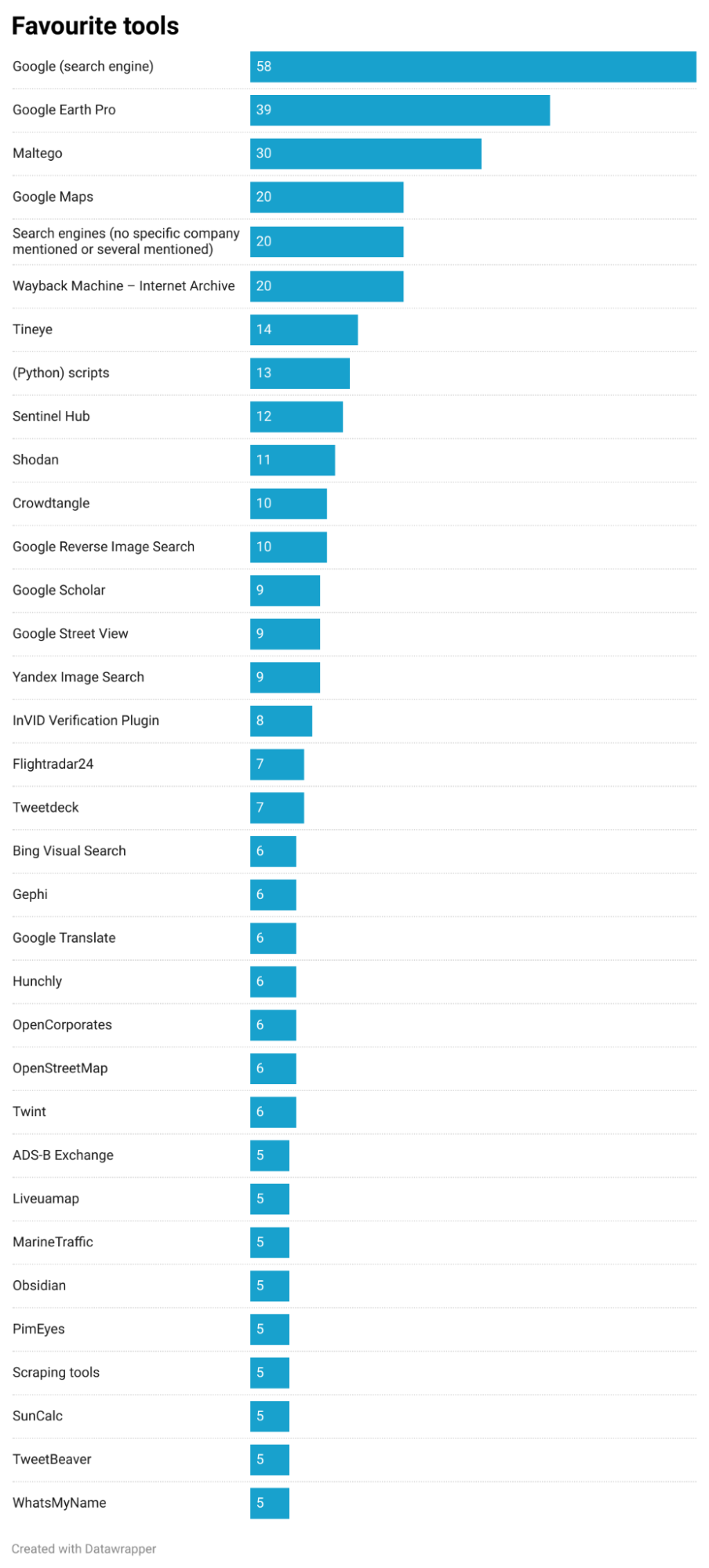

List of favourite tools. Only tools that were mentioned at least five times were included. It was possible to name several tools at once. The number after each tool name indicates how many times that answer was given.

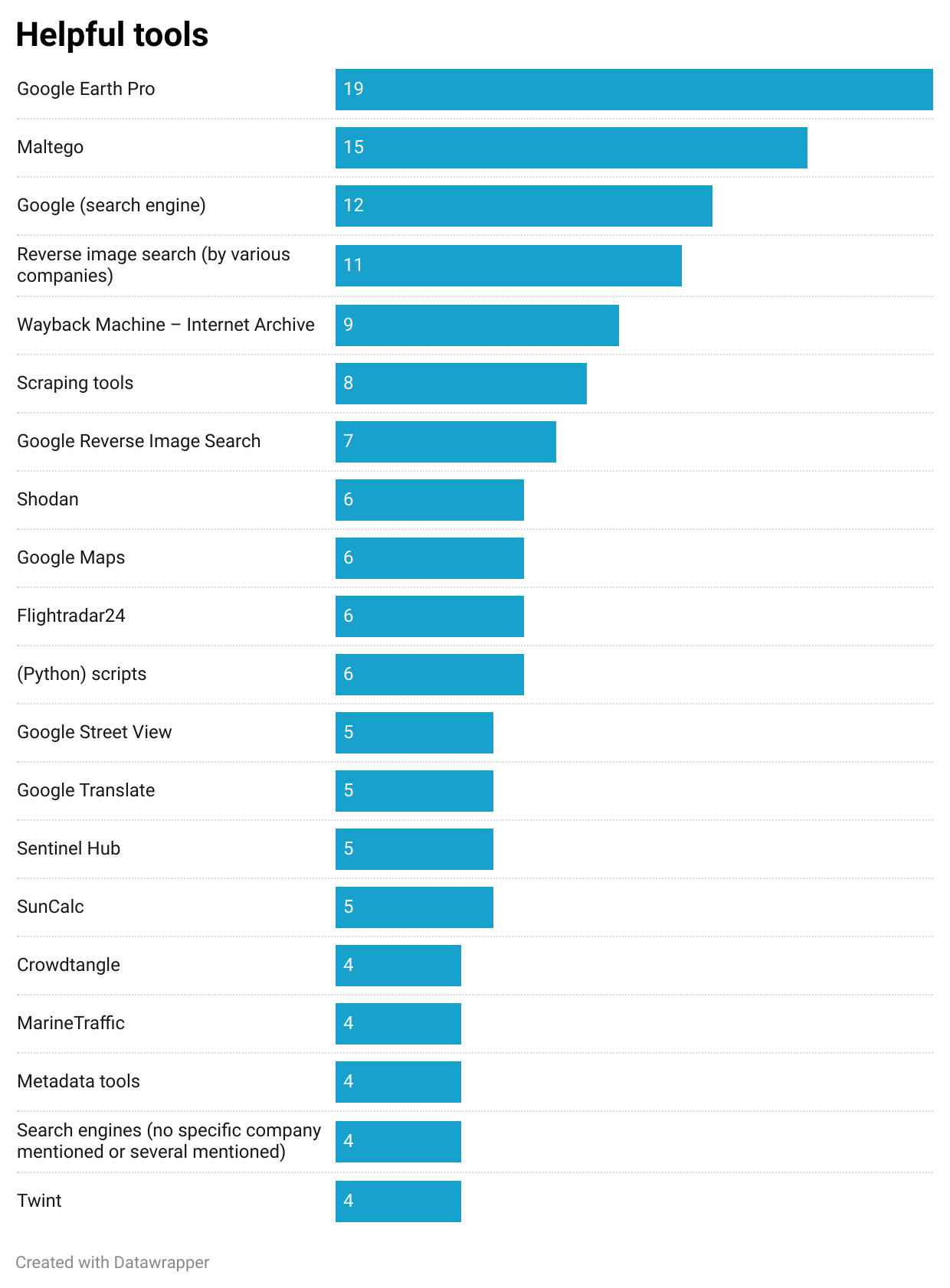

List of the tools that online researchers considered most useful during one of their research cases. Since we received fewer answers to the second question, we included all tools that were mentioned at least four times. It was possible to name several tools at once.

The winner in terms of favourite “tools” was the search engine Google. Even experienced researchers who used many other tools would often return to it. Those with less experience reported that Google was often the first and only “tool” they had used so far.

The second-favourite tool, and at the same time the one considered most helpful for open source investigations, was Google Earth Pro. Many respondents mentioned its free access to satellite imagery, both historical and current, as crucial for geolocation.

The graphical link analysis tool Maltego was the third favourite and second most helpful tool. We could not always determine whether respondents used Maltego’s free or paid edition. The survey respondents explained that Maltego helped them find hidden connections between people or organisations and gather information related to a research case in one place.

The vast majority of researchers’ favourite tools — from flight trackers to social media monitors — could be used for free or at least had a basic free version. Amongst online researchers’ favourite tools was also the Wayback Machine, a digital website archive.

Although respondents found several scraping tools helpful, these did not appear at the top of the favourite tools list. A potentially related finding, given that scraping tools often require use of the command line, is that researchers who can write their own scripts to automate parts of their research often considered those scripts to be their favourite tools. Several online researchers mentioned that they write their own code to meet the needs of a specific research case. Others indicated that they are able to use scripts they find on code-sharing platforms like GitHub.

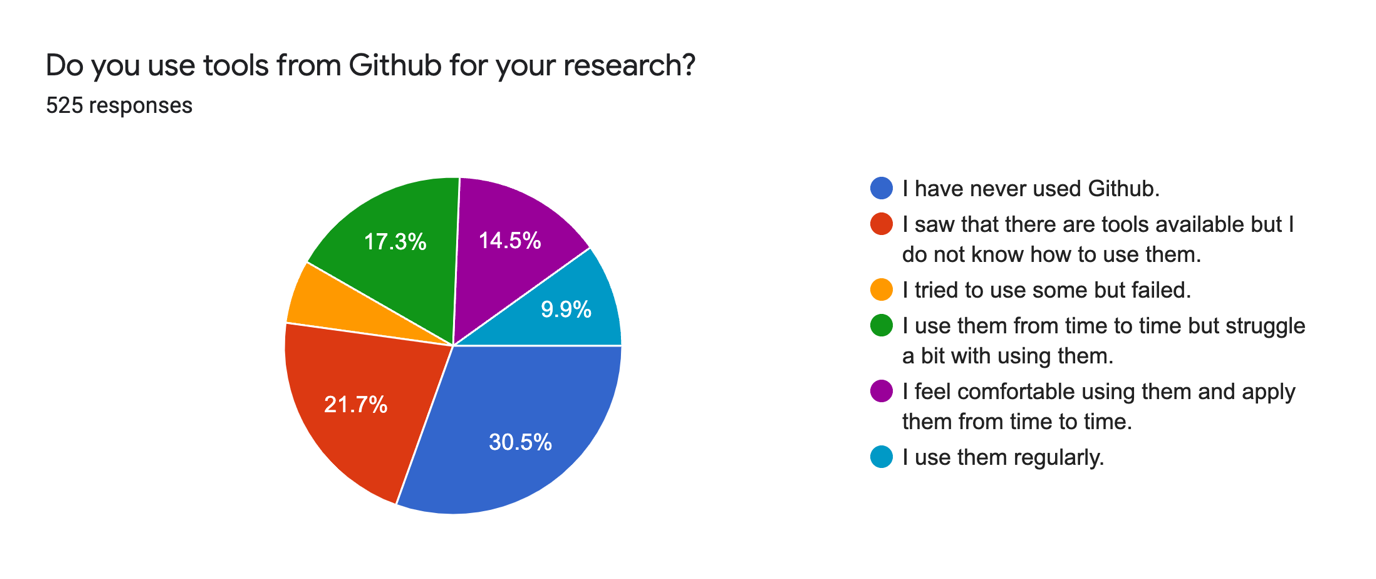

However, only the more tech-savvy members of the worldwide open source investigator community have access to this large world of available tools. More than 30% of our survey respondents have never used GitHub. Another 22% are aware that GitHub offers tools but they don’t know how to use them. A further 23.4% tried to use GitHub tools but struggled or failed. Only around 24% indicated that they use such tools from time to time or on a regular basis.

Therefore, developers who build tools for online researchers will currently reach only about a quarter of our respondents if they make their tool available only via services like GitHub, rather than as a web or desktop app with a user interface.

Least Popular Tools

We also asked our respondents whether there were any tools that they didn’t like or whether they had experienced situations when a specific tool did not help but slowed them down.

Not everyone answered these questions, but the limited data we received nevertheless provided insights.

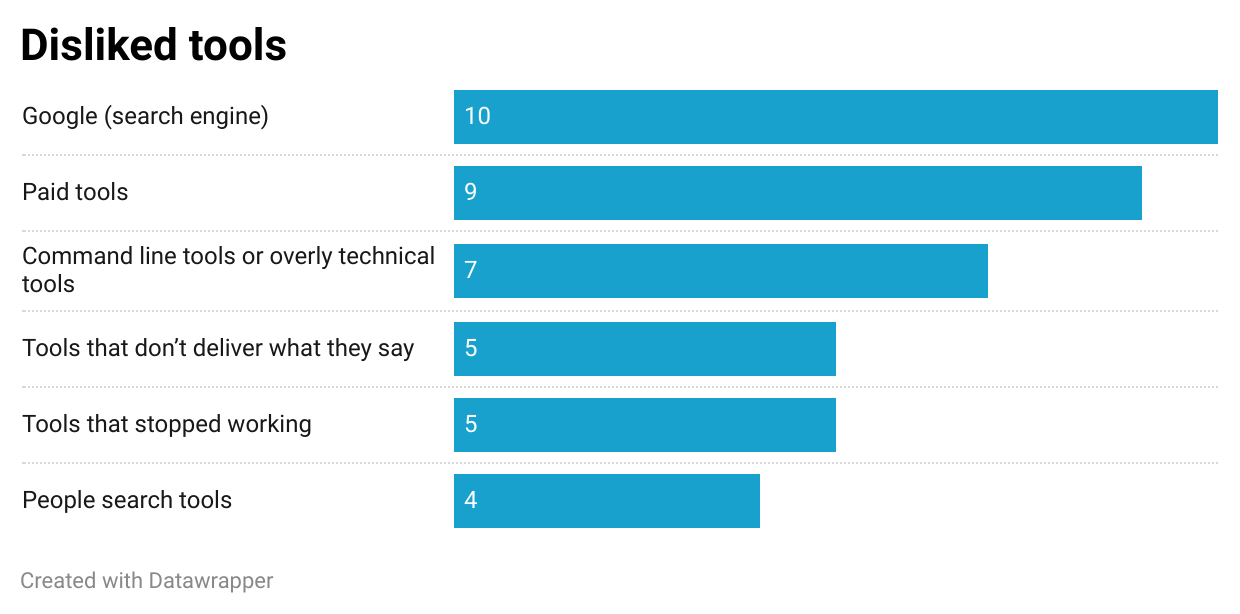

Disliked tools. Only tools or tool types that were mentioned at least four times were included in this list.

In this case, the most disliked tool was Google’s search engine, the very same tool that topped the favourites list. However, far fewer respondents (10) named the search engine as a tool they did not like than named it as one of their favourite tools (58).

Critics did not appreciate that Google showed them results that were influenced by their previous searches and mistrusted Google’s use of their own data. Eleven respondents felt that the search engine did not provide sufficiently precise results for the keywords they entered.

The second most disliked tools were those that cost money. One respondent wrote: “As most of my research is at a hobbyist level I can’t really afford subscriptions to some tools that otherwise look useful.” Other respondents pointed out that they were often disappointed when using paid tools because it seemed that such tools were overpriced or did not provide much value compared to free tools.

Some online researchers shared that they struggled to use command line or overly technical tools. “I currently don’t like Python-based tools because my skillset there is low, and I don’t have the flexibility or time to overcome that at the moment”, one person explained.

Several respondents unfavourably compared the marketing language used to sell a tool with its real capabilities. Equally unpopular were tools that had stopped working without an update from the developers. “Nothing’s worse than setting up an environment, and installing a tool only to find out it doesn’t function as advertised”, wrote one respondent.

Also mentioned were people search tools, such as Pipl or Skopenow, which show any blogs, social media accounts or email accounts they can find online for a specific person. Survey respondents felt that such tools either did not represent good value for money or delivered results of fluctuating quality.

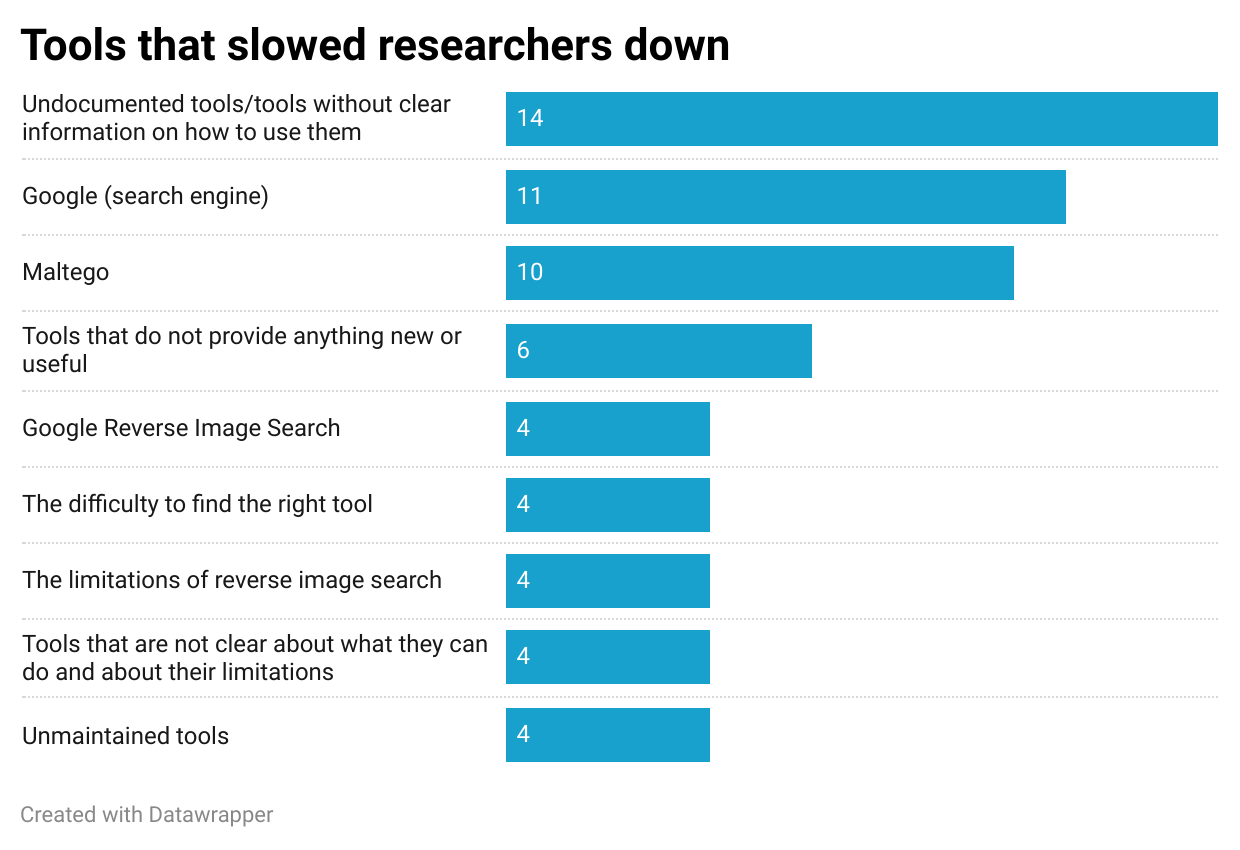

Tools that did not add anything to an investigation but rather slowed the researcher down. Only tools or tool types that were mentioned at least four times were included in this list.

When asked which tools slowed them down and why, respondents pointed to tools with poor quality instructions or documentation. Several described situations when even after setting up a tool, they were unable to understand how to use it.

Although Maltego was a well-liked tool, some researchers also criticised it. For instance, some found it cumbersome. One wrote: “It is complicated to use, and once the data is in, it has to be reworked so much to be understandable and presentable, that it is not worth it for the type of investigation that I perform.”

Respondents also complained about tools that offered nothing new or useful, leading them to conclude after using them that “I could have done everything faster manually”. They felt the same about tools that had been released prematurely, before useful features that could add value had been integrated.

Some researchers expressed disappointment about the limitations of reverse image searches. A small number complained specifically about results provided by Google Reverse Image Search. One person wrote that “it changes the picture into text description and searches by that”, pointing out that this tended not to provide helpful results. Some also thought the quality of the results had decreased over time. Other online researchers found that reverse image search techniques in general, not only Google’s, often did not provide useful results.

Other respondents pointed out that tools that are not clear about what they can do and what they are not able to do slowed them down. “There have definitely been occasions where a tool has seemed like it might have the functions needed but then doesn’t. So there is time spent in watching limited tutorial videos or searching through limited documentation, installing and/or bug-shooting packages, only to find that it doesn’t have the function you’ve ultimately been searching for”, one person wrote.

Finally, unmaintained tools were also seen as a factor that can slow down the work of researchers.

Open Source Challenges

We asked respondents to share the aspects of their work that they find most challenging. They described these challenges in their own words, which we then categorised into common themes.

Only challenges that were mentioned at least five times were included in this list.

Forty-nine of the 525 participants described the verification of online sources (that is, the whole field of photo and video verification) as their biggest challenge. Some specifically mentioned the difficulty of verifying where a photo or video was originally posted online, or of checking the trustworthiness of websites.

In a similar vein, many researchers indicated that they struggle with finding reliable and high quality sources – whether websites or individual content producers. “Knowing where to look, what sources to trust, particularly if it is a new area for me”, one explained.

Respondents also wrote that they struggle to find sources of data which are both free to access and up to date.

A frequent concern was the large amount of information with which researchers are confronted. “Too much information in too many places, too many results”, one respondent wrote. Other researchers described that due to “the sheer volume of information” they tend to get lost or are not sure what to focus on.

In addition, researchers felt overwhelmed by the task of identifying the right tools for their research. “I often face trouble remembering and navigating through the wide variety of tools available”, one person explained. Even when they found useful tools, another wrote, it was hard to know how to choose between them.

Translation also featured in these responses. Researchers found that online translation services were limited for less widely used languages, and they complained of poor-quality translations for some larger languages such as Arabic. Even when a translation succeeded, researchers still encountered challenges when searching for the translated phrases: they feared that certain nuances or connotations may have been lost in translation, affecting the search results.

Respondents also wrote that it was difficult to organise information they had discovered. They wanted the ability to collect online sources of various formats in one place, so as to keep track of their ongoing research or to later revisit it. One person wrote: “I have images saved on my photos, screenshots saved in photos, other info saved as pdf in the Books app, and so on. One tool for all would be great.”

Others felt limited due to their lack of coding skills. In some cases, this hampered their ability to automate a certain step of their research workflow. Several researchers saw the need to use command line tools but did not know how. “Not knowing how to code or interact with code, Github is useless for me and it’s my mistake honestly, I need to get a hold of this”, wrote one respondent.

Another obstacle was the perceived lack of time to conduct research. As one respondent put it: “So much data, so little time”. Another found it particularly challenging to make “crucial decisions for the rest of the investigation based on time management/time”.

A further challenge was a perceived lack of online research experience.

Some respondents also said they struggled with making sense of the online information they collected, or “connecting the dots between the scattered info”.

Other respondents found it difficult simply knowing where to start with an online investigation.

Compounding this challenge was the fear of manipulated information or footage, the difficulty of finding relevant datasets and the complexities of narrowing down search queries to the most useful list of keywords or phrases.

We asked respondents to recall a situation in which they were stuck with their research and to describe the tool that could have helped them proceed. Most of the ideas were shared by only one or two of the online researchers, so they are not representative of our survey respondents as a whole. However, we recommend that aspiring tool developers look at the list of tool needs to gain insight into the very concrete tool ideas that nearly 200 of our respondents identified based on their work.

How do researchers find tools?

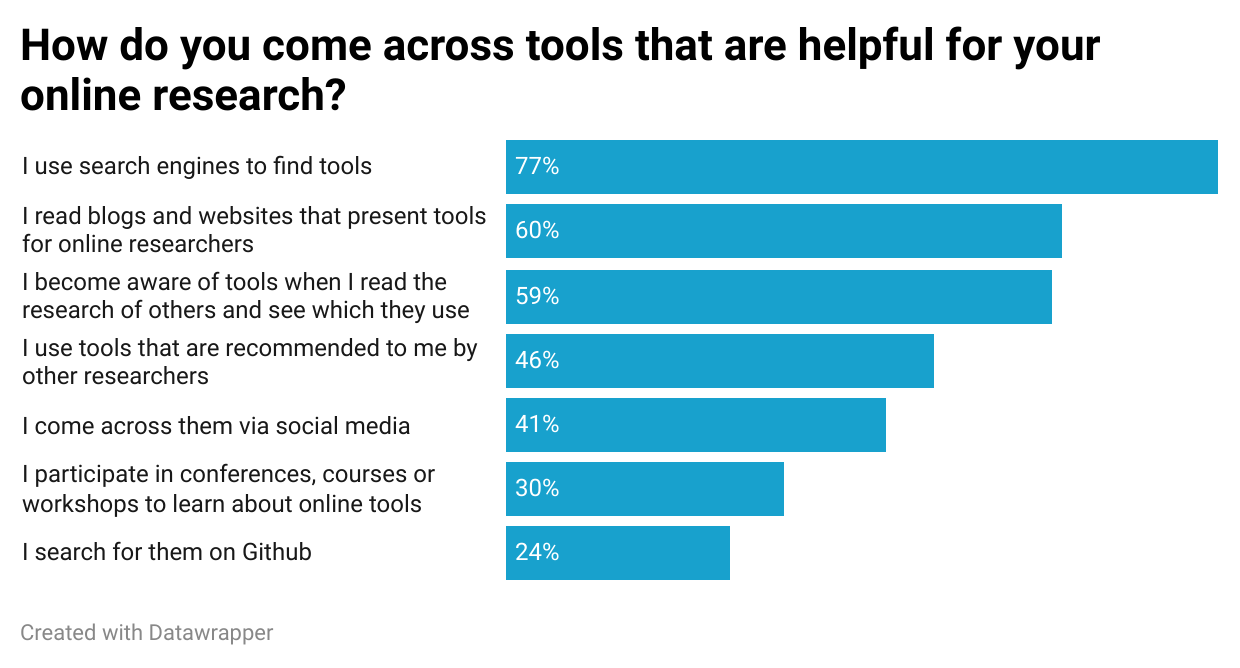

All 525 survey respondents answered this question. Most of them used a combination of several channels to learn about tools.

Search engines were the most common way to find tools. Perhaps surprisingly, social media networks did not occupy second or even third place; these were taken by blogs or websites that presented tools, and by reading the work of other researchers.

Survey respondents were allowed to select several answers at once.

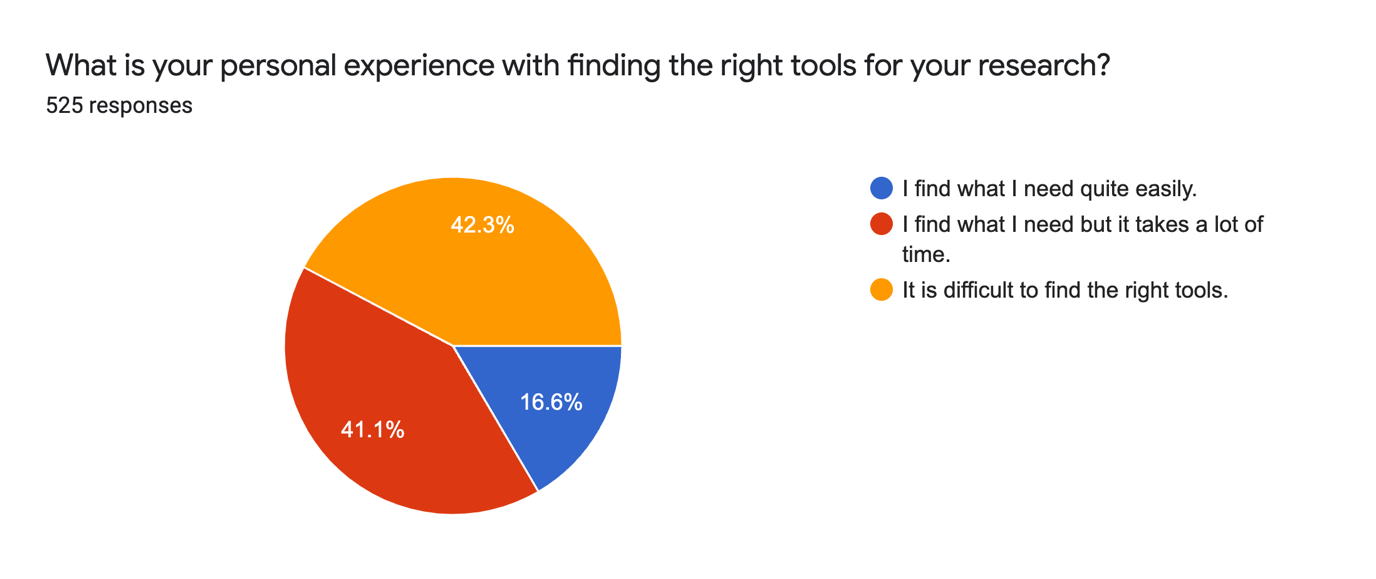

Nevertheless, the majority of open source researchers still struggle to find the right tools for their work. More than 83% of the survey respondents find it either difficult or time-consuming to find the right tools.

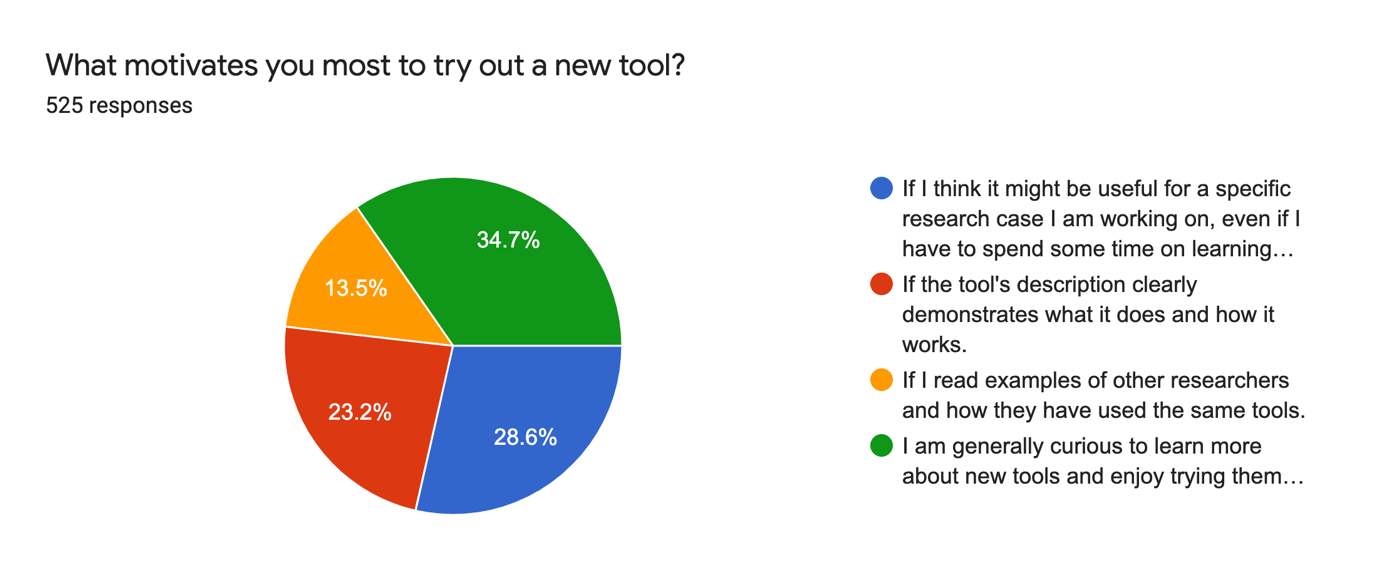

When asked what motivates them to try out a new tool, around 35% of our respondents indicated that they can easily be convinced to do so. This group of online researchers describes itself as “generally curious to learn more about new tools”, even if those tools aren’t directly relevant to their work.

The second largest group, just under 29%, indicated that they try out a new tool if they think it might help with specific research. In this case, they are even ready to spend some time learning how to use the tool.

The complete text for the blue category was: “If I think it might be useful for a specific research case I am working on, even if I have to spend some time on learning how to use the tool first.” The complete text for the green category was: “I am generally curious to learn more about new tools and enjoy trying them out even if I am not immediately working on a specific research project for which they could be useful.”

The third group, around 23%, said they felt motivated to try out a new tool if the tool’s description clearly demonstrates what it does and how it works. And 13.5% indicated that they like to try out a new tool after reading how other researchers have used it.

Lessons for Tool Developers

These results clearly show that open source researchers have specific criteria for the tools they would like to see.

First and foremost, tool developers who want to reach a large percentage of this community need to offer tools for free. Since many online researchers do this type of work in their spare time or belong to small teams with tight budgets, they are often not able to pay for access to tools.

Researchers also need detailed documentation on how exactly a tool can be used. If online researchers do not find step-by-step instructions and accessible explanations, many will get frustrated and will most likely not come back to the tool. They also expect to be informed when a tool stops working.

Online researchers also expect transparency from developers about the limitations of their tools. They are very averse to marketing language and efforts to oversell a tool’s capabilities.

Tool developers who create apps with user interfaces have the potential to reach significantly more online researchers than those who provide command line tools. Currently, a significant part of the worldwide online researcher community is unable to use the command line. However, a number of researchers have become aware that their lack of technical skills is a limitation and are eager to learn more.

Overall, many online researchers are very open and curious to try out new tools. There are many areas in which new tools could have a huge impact on the work of open source researchers. The efforts of developers who dedicate their time to making open source and online research easier and more effective will be highly welcomed, particularly if they take this feedback into account.

Logan Williams contributed visualisations to this text.

The Bellingcat Investigative Tech Team develops tools for open source investigations and explores tech-focused research techniques. It consists of Aiganysh Aidarbekova, Tristan Lee, Miguel Ramalho, Johanna Wild and Logan Williams. Do you have a question about applying these methods or tools to your own research, or an interest in collaborating? Contact us here.