Faking It: Deepfake Porn Site’s Link to Tech Companies

This article is the result of a collaboration with German YouTube channel STRG_F, which has also released a documentary.

Warning: This article discusses non-consensual sexually explicit content from the start.

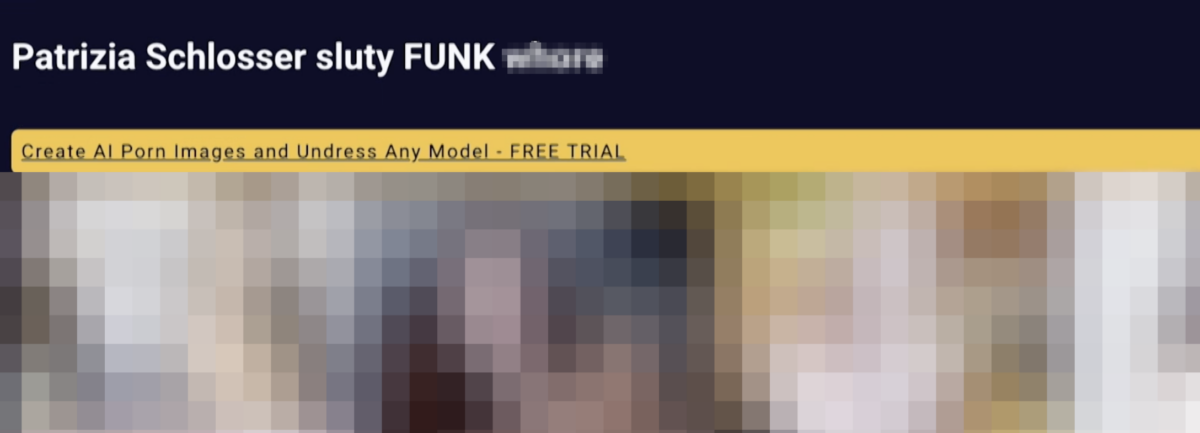

The graphic images claim to show Patrizia Schlosser, an investigative reporter from Germany. She’s depicted naked and in chains.

“At first I was shocked and ashamed – even though I know the pictures aren’t real,” said Schlosser, who believes that she may have been targeted because of her reporting on sexualised violence against women.

“But then I thought: no, I shouldn’t just keep quiet, I should fight back.”

Schlosser decided to track down the man who posted the doctored photos of her to a deepfake pornographic website. “At first I had the same impulse as many other victims: it’s not worth it, you’re powerless,” she said.

“But it turns out that we are not.”

Schlosser, like a growing number of women, is a victim of deepfake technology, which uses artificial intelligence to create sexually explicit images and videos of people without their consent. Actresses, musicians and politicians have been targeted.

But it’s not only celebrities whose images have been used without their consent – it is now possible to create hardcore pornography featuring the facial likeness of anyone with just a single photo. Many non-public figures have been impacted, including in the UK, the US and South Korea.

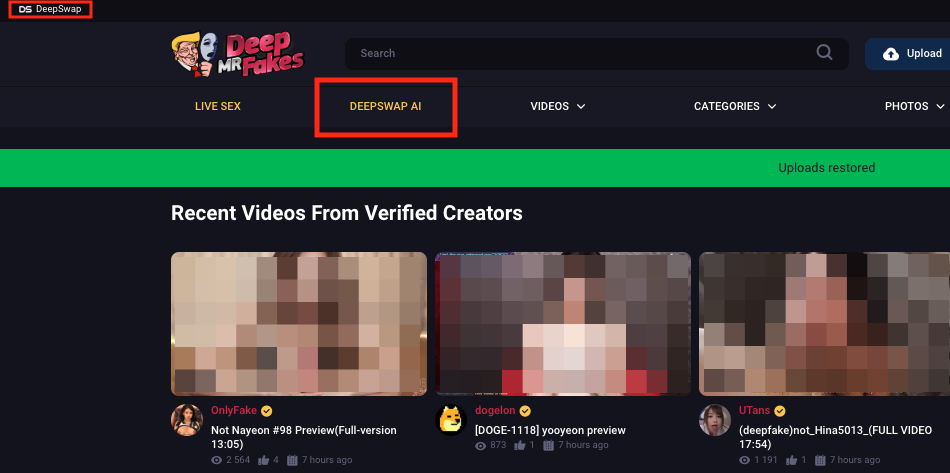

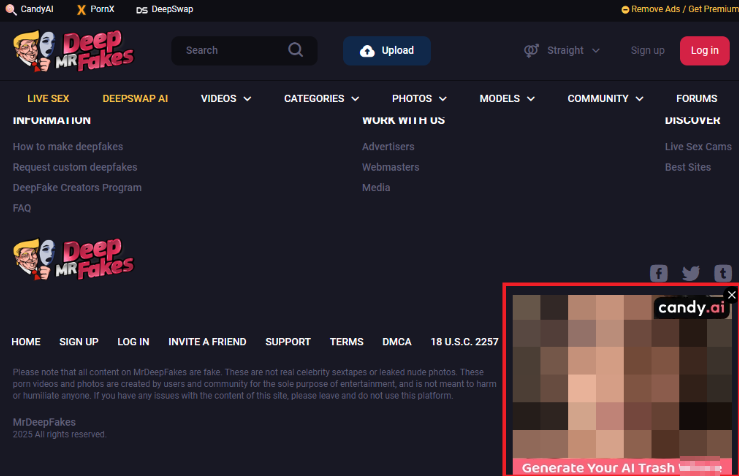

The most notorious marketplace in the deepfake porn economy is MrDeepFakes, a website that hosts tens of thousands of videos and images, has close to 650,000 members, and receives millions of visits a month.

According to an analysis by our publishing partner STRG_F, the explicit content posted to MrDeepFakes has been viewed almost two billion times. The album claiming to show Schlosser – which included photos with men and animals – was online for almost two years.

The MrDeepFakes website contains no information about who is behind the operation, and its administrators appear to have gone to great lengths to keep their identities secret. Since its inception, the site’s hosting providers have bounced around the globe.

Its financial operations also point to a calculated effort to obscure ownership. Premium memberships can be bought with cryptocurrency via a system that uses a new address for each transaction, making it virtually impossible to track the beneficial owners. Transactions through PayPal, intermittently available on the site, link to numerous accounts under unverifiable names.

While it has not yet been possible to uncover who is behind MrDeepFakes, the website reveals some clues about two independent apps that have been prominently advertised on the site. One leads back to a Chinese fintech firm that conducts business globally and is traded on the Hong Kong stock exchange. The other is owned by a Maltese company led by the co-founder of a major Australian pet-sitting platform.

Deepswap

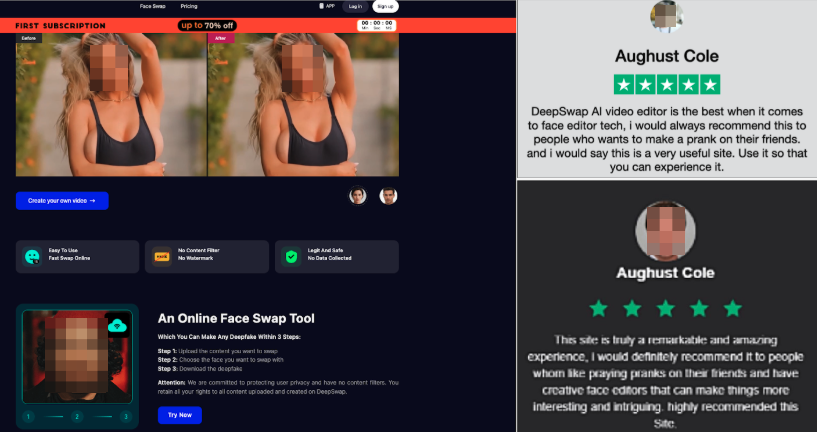

Deepswap AI is permanently linked on the top bar of the MrDeepFakes website. It is a tool that allows users to create lifelike deepfake photos and videos for US$9.99 per month. Pop-up ads for the app on MrDeepFakes have included images and videos captioned with “Deepfake anyone you want” and “Make AI porn in a sec”. The app’s website says it is “redefining the face swap industry” and a series of five-star reviews – attributed to users with the same name but different profile pictures – praise the app for its ease of use.

The website contains no obvious information about the people or businesses behind it. However, its privacy policy says the app is maintained in Hong Kong. A Google search for mentions of “Hong Kong” on the site returns a company information page along with contact details. The company, called Deep Creation Limited, is based in a high-rise building in central Hong Kong.

Deepswap’s website linked to apps on both the Google Play and Apple stores. DeepSwap PRO was advertised until last week on the Google Play Store, where it had been downloaded more than 10,000 times. In response to questions from Bellingcat, a Google spokesman said the app was “suspended and no longer available”. The app’s developer was listed as an entity called Meta Way.

The Deepswap app is no longer available on the Apple Store. The app currently linked to the Apple Store from Deepswap’s website is described as an AI-powered “personal outfit gallery”. Archived pages of the app that is no longer available show the full name of the developer is Metaway Intellengic Limited, a company based in Hong Kong.

Hong Kong’s Companies Registry is available to the public and charges a modest fee for access to corporate information, including the identities of company directors and shareholders. A search of the register shows the sole director of Metaway Intellengic is a Mr Zhang, a resident of Shenzhen, the mainland city bordering Hong Kong.

Metaway Intellengic’s 100 percent shareholder is Deep Creation Limited, whose sole shareholder, in turn, is a company in the British Virgin Islands called Virtual Evolution Limited. Zhang is also the director of Deep Creation Limited and signed off on incorporation documents for the Deepswap-linked app currently listed on the Apple store on behalf of Virtual Evolution Limited, which is listed as a “founder member” for the app, according to records obtained from the Hong Kong registry.

An online search for the three companies returned several mentions in PDFs related to another Shenzhen-based company – Shenzhen Xinguodu Technology Co., Ltd. Better known as Nexgo, the publicly traded company provides technology for payment devices such as card readers. On the surface, Nexgo does not appear to be the type of entity associated with deepfake pornography. It is listed on the Hong Kong Stock Exchange and operates globally, with subsidiaries in Brazil, Dubai and India.

Nexgo’s financial reports for 2023 and the first half of 2024 refer to both Deep Creation Limited and Virtual Evolution Limited as “subsidiaries of associates”. Its 2023 annual report also shows a 1,726,350 Chinese yuan stock transfer (approximately US$238,000) between Nexgo and Virtual Evolution Limited, although the direction of the transfer is unclear.

Kenton Thibaut, a senior resident China fellow at the Atlantic Council’s Digital Forensic Research Lab in Washington DC, said Chinese entities engaged in “questionable behaviour” are often structured this way.

“It’s par for the course that you’ll have a parent company and then a very long list of subsidiaries that are registered in Hong Kong, because Hong Kong has a different legal structure than mainland China,” she said. “You want six or seven levels of distance between the main parent company and then whatever company is doing the main business. This is how many Chinese companies engage in questionable behaviour.”

Nexgo and Deepswap had not responded to multiple requests for comment as of publication.

China: Deepfakes and Data

The Chinese government has passed a number of laws prohibiting the use and dissemination of deepfakes domestically.

The Civil Code of China prohibits the unauthorised use of a person’s likeness, including by reproducing or editing it. The Deep Synthesis Regulation, effective from January 2023, more specifically prohibits the misuse and creation of deepfake images without consent. Any company engaging in this behaviour can face criminal charges.

Furthermore, the production, sale or dissemination of pornographic materials – including through advertisements – is illegal in China.

Under President Xi Jinping, China has also passed a raft of laws requiring companies to store data locally and provide it upon request to the Chinese Communist Party. Concerns that China’s government could access data on foreign citizens have fuelled the recent controversy over the fate of video-sharing app TikTok in America.

Deepswap is promoted on an English-language, Western-facing website and, like similar apps, collects its users’ private data. Its privacy policy allows the app to process photos and videos, email addresses, traffic data, device and mobile network information and other identifying pieces of information – all of which is stored in Hong Kong and subject to local requests by courts and law enforcement.

Thibaut said the harvesting of data by apps linked to China could have serious privacy and security implications. “It could be used by these companies to run scams or public opinion monitoring, but it also can be used to identify persons of interest – someone who could work in a secure facility, for example,” she said.

“This fits under an ecosystem where you have private companies that can be two steps removed from former intelligence officers who establish cyber, AI and media companies. And then these firms contract with organisations like the Ministry of State Security or the People’s Liberation Army and gather data for them for specific tasks.”

The 2020 National Security Law, enacted in Hong Kong in the wake of the 2019-2020 protests, gave authorities broad powers to request access to user data for national security reasons, bypassing local privacy laws in other countries.

Rebecca Arcesati, lead analyst at the Mercator Institute for China Studies, said Chinese state security organisations can get directly involved with cases and demand access to data. “This means that both Hong Kong and mainland authorities may access privately-held data of the kind that Deepswap collects, even when stored in Hong Kong.”

Arcesati said the distinction between China’s private sector and state-owned companies was “blurring by the day”. She said China’s official position on data sharing between private companies and the government was that it must be necessary and be based on lawful mechanisms like judicial cooperation. But she added that “there are legal provisions, not to mention extra-legal levers”, that can compel companies to hand over data to security organisations upon request.

Candy.ai

Pop-ups at the bottom of MrDeepFakes have also advertised an app called Candy.ai, crudely captioned with text such as “Generate Your AI Trash Wh**e” and “AI Jerk Off”. The website for Candy.ai claims it allows users to create their own “AI girlfriend”, a phenomenon that has grown increasingly popular in recent years with the emergence of advanced generative AI.

Candy.ai’s inclusion on MrDeepFakes appears to be recent. An archive of MrDeepFakes from Dec. 17, 2024, shows no mention of the web app, while another archive from three days later has a link to the site at the top of the page. This suggests the app was first promoted on MrDeepFakes some time in mid-December.

Candy.ai’s terms of service say it is owned by EverAI Limited, a company based in Malta. While neither company names its leadership on its website, the chief executive of EverAI is Alexis Soulopoulos, according to his LinkedIn profile and job postings by the firm. Soulopoulos was also the subject of earlier reporting in relation to Candy.ai.

Soulopoulos was the co-founder of Mad Paws, a publicly listed Australian company that offers an app and online platform for pet owners to find carers for their animals. Soulopoulos no longer works for the pet-sitting platform, according to a report in The Australian Financial Review, and his LinkedIn says he has been the head of EverAI for just over a year.

Links to Candy.ai were removed from MrDeepFakes following questions from Bellingcat last week.

An EverAI spokesman said it does “not condone or promote the creation of deepfakes”. He said the company has implemented moderation controls to ensure that deepfakes are not created on the platform and that users who attempt to do so are in violation of its policies. “We take appropriate action against users who attempt to misuse our platform,” he said.

The spokesman added that the app’s promotion on the deepfake website came through its affiliate programme. “The online marketing ecosystem is complex, and some affiliate webmasters have more than 100 websites where they might place our ads,” he said.

“When we learn that our advertisement has been placed on a website that does not align with our policies or values, we cut ties with the publisher, as we did here with MrDeepFakes. Additionally, we have directed them to remove all material referring or pointing to our platform.”

Affiliate marketing rewards a partner for attracting new customers, often in the form of a percentage of sales made from promoting the business or its services online. According to Candy.ai’s affiliate programme, partners can earn up to a 40 percent commission when their marketing efforts lead to recurring subscriptions and token purchases on the platform.

This is a popular method of online marketing, utilised by companies including Amazon and eBay. It is also a common practice in the gambling world. In 2017, the UK’s Advertising Standards Authority (ASA) upheld complaints against a number of gambling firms in relation to affiliate-placed adverts. In a ruling against one firm that had “maintained the ad had been produced by an affiliate”, the ASA held them responsible as they were “the beneficiaries of the marketing material”.

A Growing Threat

In 2023, the website Security Hero published a report describing the proliferation of deepfake pornography. It found 95,820 deepfake videos online from July to August 2023. Almost all of these videos were deepfake pornography and 99 percent of victims were women.

Increasingly, this is becoming an issue affecting young girls. A 2024 survey by tech company Thorn found that at least one in nine high school students knew of someone who had used AI technology to make deepfake pornography of a classmate.

Bellingcat has conducted investigations over the past year into websites and apps that enable and profit from this type of technology, ranging from small start-ups in California to a Vietnam-based AI “art” website used to create child sexual abuse material. We have also reported on the global organisation behind some of the largest AI deepfake companies, including Clothoff, Undress and Nudify.

Governments around the world are taking varying approaches to tackle the scourge of deepfake pornography. The EU does not have specific laws that prohibit deepfakes but in February 2024 announced plans to call on member states to criminalise the “non-consensual sharing of intimate images”, including deepfakes.

In the UK, the government announced new laws in 2024 targeting the creators of sexually explicit deepfakes. But websites like MrDeepFakes – which is blocked in the UK, but still accessible with a VPN – continue to operate behind proxies while promoting AI apps linked to legitimate companies.

MrDeepFakes did not respond to interview requests.

Ross Higgins, Connor Plunkett, George Katz, Kolina Koltai and Katherine de Tolly contributed to this article.
